Master Thesis - Paper reviews 02

Perceptual Losses for Real-Time Style Transfer and Super-Resolution

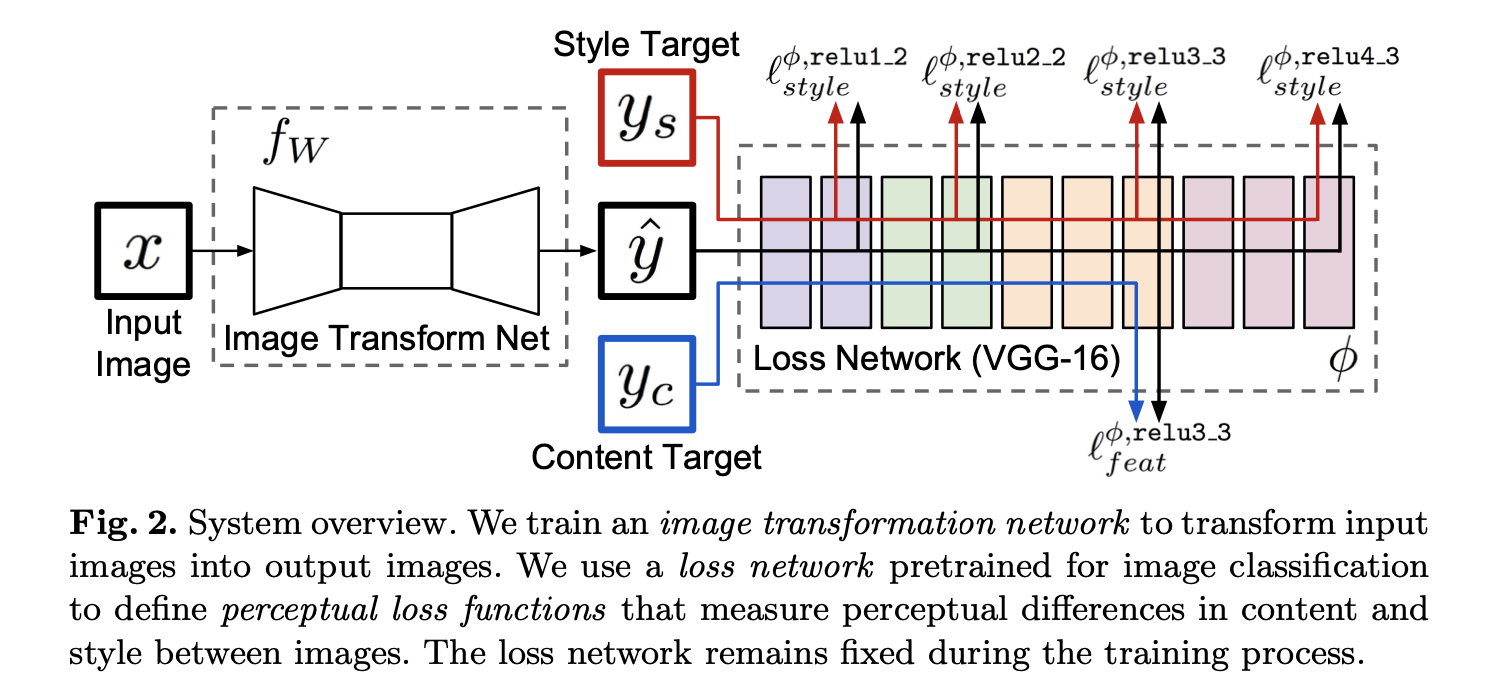

- The losses are calculated from the responses of layers of a pretrained VGG network (VGG-16 in the paper).

- To calculate the losses, the responses of both the generated image and the HR image are computed at different VGG layers. The VGG weights are frozen (not updated during training).

Fig.1: General Architecture

- For SR with upscaling factor s, the input image is downscaled by the reciprocal scale (1/s), so the input size is 3x(288/s)x(288/s).

- The output size is 3x288x288.

- HR training images are randomly cropped to 3x288x288 patches.

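- A degradation function (bicubic downsampling by 1/s) is applied to each HR patch to create the LR input. In the paper, the patch is also blurred with a Gaussian kernel of width σ = 1.0 before downsampling.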

- No pooling layers are used in this design.

- For upsampling, convolutional layers with fractional stride (transposed convolutions) are used.
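A minimal sketch of how one training pair could be prepared, assuming images are stored as C x H x W nested Python lists. The helper names (`random_crop`, `box_downsample`, `make_training_pair`) are hypothetical, and the degradation here is plain s x s box averaging, a simplified stand-in for the bicubic downsampling (and Gaussian blur) described above. Only the shapes match the paper's setup.

```python
import random

# Images are nested lists indexed as img[channel][row][col] (C x H x W).


def random_crop(img, size, rng):
    """Return a random size x size crop of a C x H x W image (hypothetical helper)."""
    h, w = len(img[0]), len(img[0][0])
    if h < size or w < size:
        raise ValueError("image is smaller than the crop size")
    top = rng.randint(0, h - size)  # randint is inclusive on both ends
    left = rng.randint(0, w - size)
    return [[row[left:left + size] for row in ch[top:top + size]] for ch in img]


def box_downsample(img, s):
    """Downscale by an integer factor s by averaging each s x s block.

    Hypothetical helper: a simplified stand-in for bicubic downsampling.
    """
    h, w = len(img[0]), len(img[0][0])
    if h % s or w % s:
        raise ValueError("patch size must be divisible by s")
    return [
        [
            [
                sum(ch[i * s + di][j * s + dj] for di in range(s) for dj in range(s)) / (s * s)
                for j in range(w // s)
            ]
            for i in range(h // s)
        ]
        for ch in img
    ]


def make_training_pair(hr_image, s, patch=288, rng=None):
    """Return (lr_input, hr_target) with shapes 3 x patch/s x patch/s and 3 x patch x patch."""
    if rng is None:
        rng = random.Random()
    hr_patch = random_crop(hr_image, patch, rng)
    return box_downsample(hr_patch, s), hr_patch
```

For s = 4 the LR input is 3x72x72 and the target is 3x288x288; for s = 8 the input shrinks to 3x36x36.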

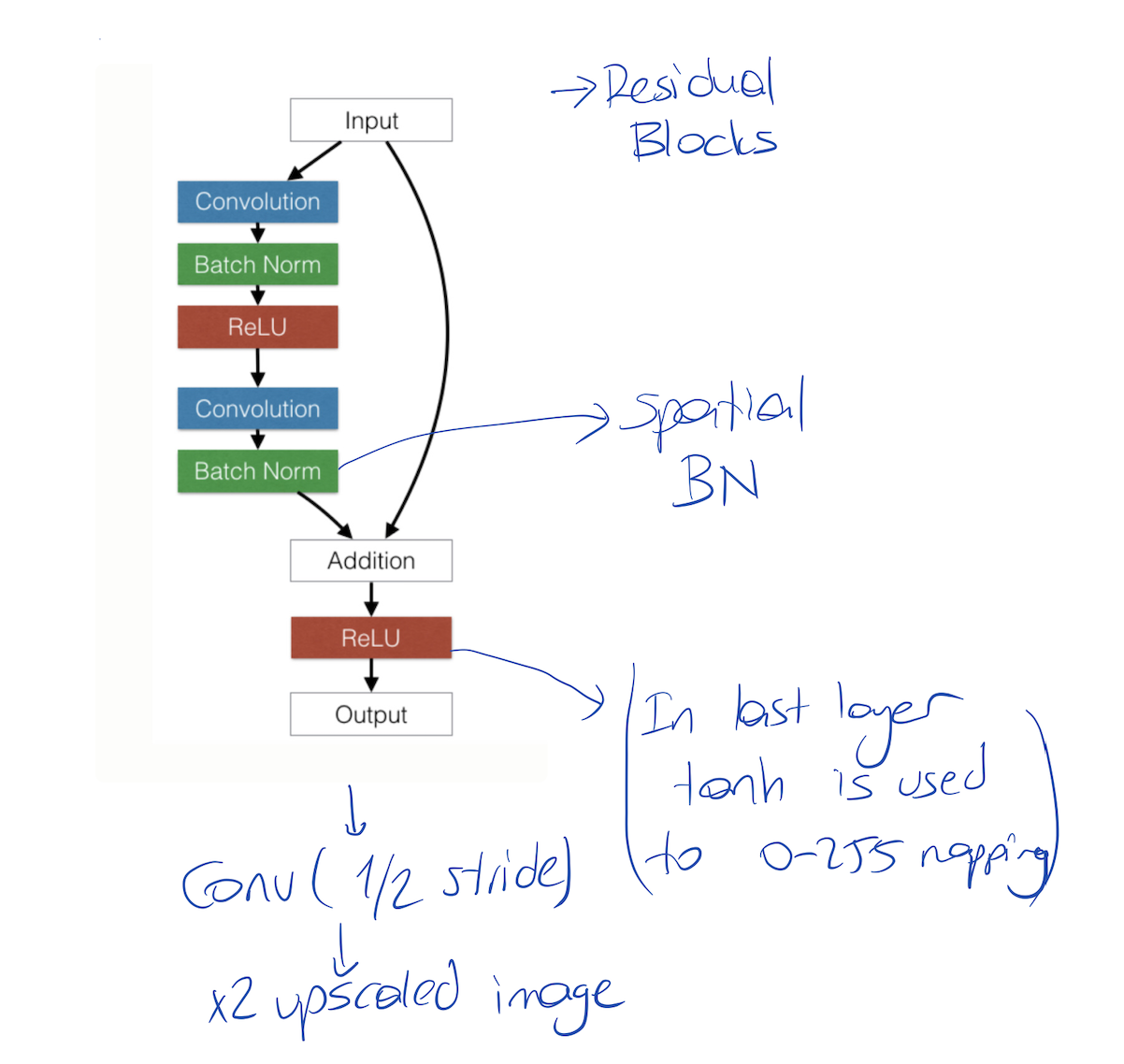

- There are 5 residual blocks. Each block consists of convolutional, batch normalization, and ReLU layers, with an identity skip connection adding the block's input to its output. A sample residual block is shown in Figure 2.

Fig.2: Residual block design

- The residual blocks are followed by convolutional layers with stride 1/2, each of which upsamples by x2. In total there are $\log_2 s$ such fractionally strided convolution layers (e.g. 2 layers for s = 4).
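The x2 upsampling step can be illustrated in 1D. The sketch below implements a stride-1/2 convolution as zero insertion followed by an ordinary stride-1 convolution, and counts the $\log_2 s$ layers needed. Kernel size 3, padding 1 and output padding 1 are assumed values chosen so the output length is exactly doubled; they are not taken from the paper. The output-length formula matches the one documented for PyTorch's `ConvTranspose1d`/`ConvTranspose2d` (with dilation 1). `trace_spatial_sizes` additionally assumes every other layer keeps the spatial size unchanged; all function names are hypothetical.

```python
import math


def fractional_stride_conv1d(x, w, stride=2, pad=1, output_padding=1):
    """1D convolution with stride 1/stride, i.e. a transposed convolution.

    Zero-insertion view: place the inputs `stride` positions apart, pad the
    ends, then run an ordinary stride-1 convolution with the flipped kernel.
    Output length: (len(x) - 1) * stride - 2 * pad + len(w) + output_padding.
    """
    k = len(w)
    if pad > k - 1:
        raise ValueError("pad must be at most len(w) - 1")
    # 1) Zero insertion: (stride - 1) zeros between neighbouring samples.
    up = [0.0] * ((len(x) - 1) * stride + 1)
    for i, v in enumerate(x):
        up[i * stride] = v
    # 2) Zero padding at both ends (extra output_padding on the right).
    up = [0.0] * (k - 1 - pad) + up + [0.0] * (k - 1 - pad + output_padding)
    # 3) Ordinary stride-1 convolution with the flipped kernel.
    wf = w[::-1]
    return [sum(up[j + t] * wf[t] for t in range(k)) for j in range(len(up) - k + 1)]


def num_upsampling_layers(s):
    """Number of x2 (stride-1/2) layers for upscaling factor s, i.e. log2(s)."""
    n = round(math.log2(s))
    if 2 ** n != s:
        raise ValueError("s must be a power of two")
    return n


def trace_spatial_sizes(s, hr_size=288):
    """Spatial size after each x2 layer, starting from the LR input.

    Assumption: all other layers (padded convolutions, residual blocks) keep the size.
    """
    size = hr_size // s
    sizes = [size]
    for _ in range(num_upsampling_layers(s)):
        size *= 2
        sizes.append(size)
    return sizes
```

For s = 4, `trace_spatial_sizes(4)` gives 72 → 144 → 288, i.e. two stride-1/2 layers; for s = 8 a third layer is added.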


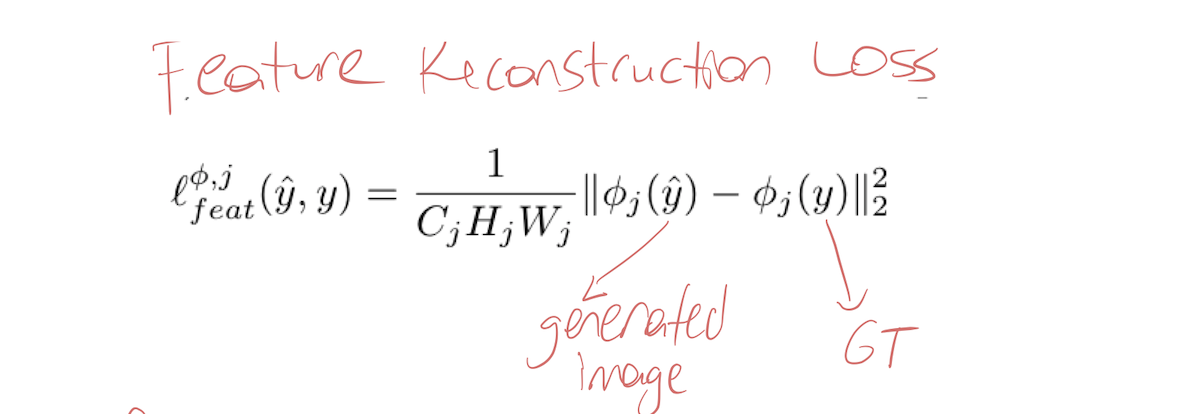

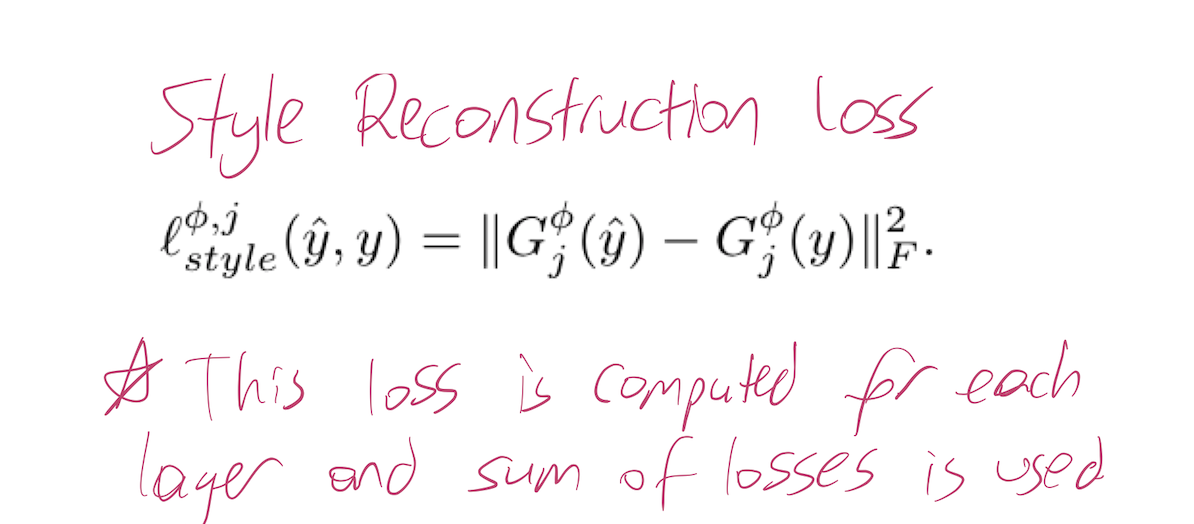

- There are two types of loss functions: feature reconstruction loss (FRL) and style reconstruction loss (SRL). For SR, only FRL is used.

- FRL is the mean squared error between the VGG responses of the HR image and the generated image; for SR, the relu2_2 layer is used.

Fig.3: Feature reconstruction loss (FRL)
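The FRL can be written as $\ell_{feat}(\hat{y}, y) = \frac{1}{CHW}\lVert \phi(\hat{y}) - \phi(y) \rVert_2^2$, where $\phi$ is the relu2_2 response of shape C x H x W, $\hat{y}$ is the generated image and $y$ is the HR image. A minimal sketch in plain Python, assuming both feature maps have already been extracted from the frozen VGG as nested lists (running VGG itself is out of scope here, and the function name is hypothetical):

```python
def feature_reconstruction_loss(phi_gen, phi_hr):
    """Squared L2 distance between two C x H x W feature maps, divided by C*H*W.

    Hypothetical helper: phi_gen / phi_hr are assumed to be precomputed VGG
    responses (e.g. relu2_2) of the generated and HR images.
    """
    c, h, w = len(phi_hr), len(phi_hr[0]), len(phi_hr[0][0])
    if (len(phi_gen), len(phi_gen[0]), len(phi_gen[0][0])) != (c, h, w):
        raise ValueError("feature maps must have the same shape")
    total = 0.0
    for ch_g, ch_y in zip(phi_gen, phi_hr):
        for row_g, row_y in zip(ch_g, ch_y):
            for a, b in zip(row_g, row_y):
                total += (a - b) ** 2
    return total / (c * h * w)
```

Dividing by C*H*W makes this the mean squared error over all feature-map elements, so layers of different sizes give losses on a comparable scale.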

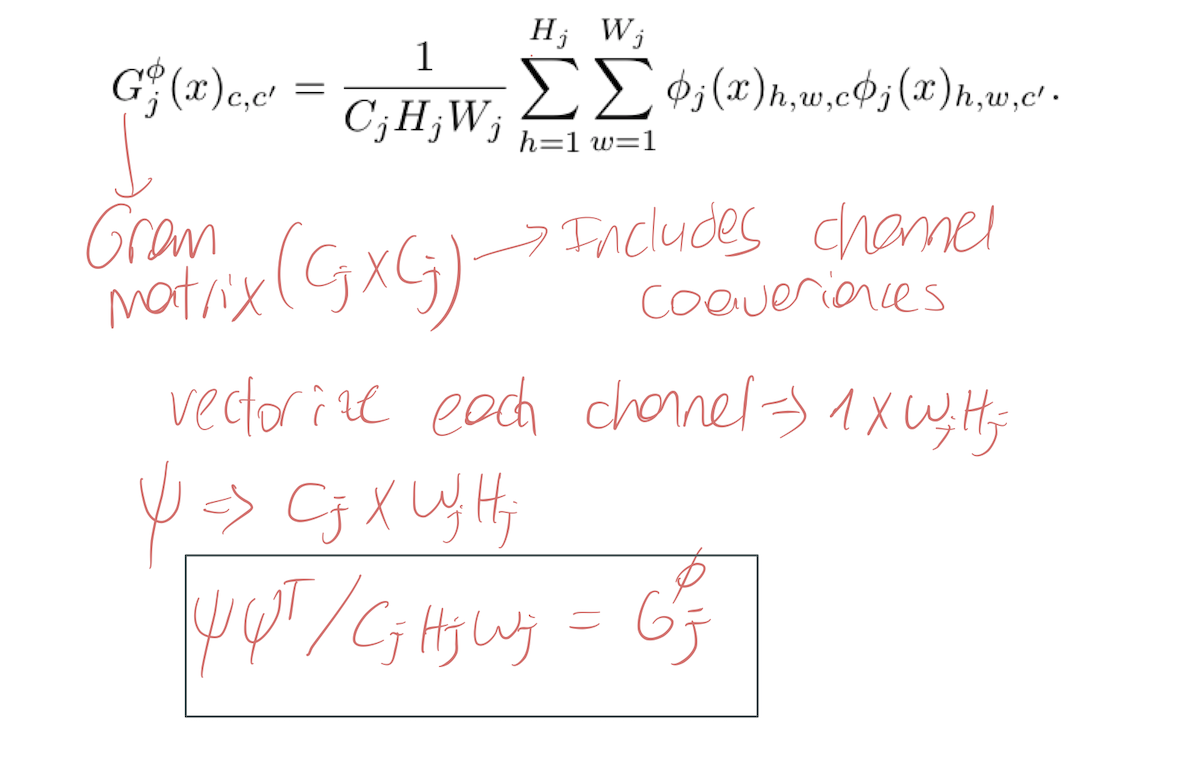

- SRL is calculated as shown in Figures 4 and 5, by comparing Gram matrices of the VGG responses of the generated image and the style target image.

Fig.4: Style reconstruction loss: Gram matrix calculation

Fig.5: Style reconstruction loss
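A minimal sketch of the Gram matrix and SRL for a single VGG layer, again assuming precomputed C x H x W feature maps as nested lists (function names are hypothetical). It follows the paper's definition $G(x)_{c,c'} = \frac{1}{CHW}\sum_{h,w}\phi(x)_{c,h,w}\,\phi(x)_{c',h,w}$, with loss $\lVert G(\hat{y}) - G(y) \rVert_F^2$; when several layers are used, the per-layer losses are summed.

```python
def gram_matrix(phi):
    """C x C Gram matrix of a C x H x W feature map, normalised by C*H*W."""
    c, h, w = len(phi), len(phi[0]), len(phi[0][0])
    # Flatten each channel into a vector of length H*W.
    flat = [[v for row in ch for v in row] for ch in phi]
    norm = c * h * w
    return [
        [sum(a * b for a, b in zip(flat[i], flat[j])) / norm for j in range(c)]
        for i in range(c)
    ]


def style_reconstruction_loss(phi_gen, phi_style):
    """Squared Frobenius norm of the difference between the two Gram matrices.

    Hypothetical helper: inputs are precomputed VGG responses of one layer.
    """
    g_gen, g_style = gram_matrix(phi_gen), gram_matrix(phi_style)
    if len(g_gen) != len(g_style):
        raise ValueError("feature maps must have the same number of channels")
    return sum(
        (a - b) ** 2 for row_g, row_s in zip(g_gen, g_style) for a, b in zip(row_g, row_s)
    )
```

Because each Gram matrix is C x C, the two feature maps only need the same number of channels, not the same spatial size.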